Independent Verification & Validation

In today's business environment, an application that fails is not just a bad piece of software; it has an adverse impact on your business. Software quality is created and assured by efficient testing, and testing services have emerged as a critical specialized functional area within the IT domain.

Testing is recognized as a specialized functional area within the development lifecycle that is critical to business success. Experts advocate that testing be conducted independently, and industry best practice includes setting up independent testing teams that function autonomously. Testing skills apply across all vertical industries and technology domains, and because testing is highly skill-focused and independent by nature, it is a prime candidate for outsourcing.

Independent testing of complex and critical applications helps organisations avoid system outages caused by application failures. The ability to test and validate business applications and software products is vital for helping organisations operate effectively. Application failures are mainly attributed to two causes: a lack of business knowledge and poor testing processes. Increasingly, companies are realizing the need for a dedicated outsourced testing "Centre of Excellence" (COE) or a Managed Services engagement. A COE enables IT organisations to improve their delivery effectiveness.

At PI Systems, we offer onsite, offshore and multishore testing engagement models to help our customers' applications succeed. Our deep domain expertise in enterprise testing, mission-critical applications, software products and real-time systems can increase the return on application investment.

This helps many organisations to:

- Improve application and organisation flexibility, and the responsiveness of testing services to business needs

- Reduce overheads associated with recruitment and management of test contract staff

- Give QA management access to a wider pool of specialists, with scalability as needed

Integration Testing

Individual software modules are combined and tested as a group. Integration Testing follows Unit Testing and precedes System Testing. It takes as its input modules that have been checked by Unit Testing, groups them into larger aggregates, applies the tests defined in an Integration Test Plan and delivers as its output the integrated system, ready for System Testing.

This type of testing is especially relevant to client/server and distributed systems. The purpose of Integration Testing is to detect any inconsistencies between the integrated software units (called assemblages) or between any of the assemblages and the hardware.

There are two approaches to integration testing:

- Incremental

- Big bang
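As a minimal sketch of the incremental approach, assuming two hypothetical modules (`parse_order` and `price_order`) that have already passed Unit Testing, the example below first integrates the parser with a stub standing in for the pricing module, then replaces the stub with the real module. A big-bang approach would instead combine all modules at once and test only the final assembly. All names here are illustrative, not part of any real system.

```python
import unittest

# Hypothetical module A: parses a raw order line such as "widget,3".
def parse_order(line):
    name, qty = line.split(",")
    return {"item": name.strip(), "qty": int(qty)}

# Hypothetical module B: prices a parsed order from a price table.
PRICES = {"widget": 2.50, "gadget": 10.00}

def price_order(order, prices=PRICES):
    return order["qty"] * prices[order["item"]]

# Assemblage under test: module A feeding module B.
def process(line, pricer=price_order):
    return pricer(parse_order(line))

# Stub used in step 1, before the real pricing module is integrated.
def stub_pricer(order):
    return float(order["qty"])

class IncrementalIntegrationTest(unittest.TestCase):
    def test_step1_parser_with_stub(self):
        # Step 1: integrate the parser with a stub in place of the pricer.
        self.assertEqual(process("widget,3", pricer=stub_pricer), 3.0)

    def test_step2_parser_with_real_pricer(self):
        # Step 2: replace the stub with the real pricing module.
        self.assertEqual(process("widget,3"), 7.5)

    def test_interface_inconsistency_is_detected(self):
        # An item the pricer does not know exposes an inconsistency between units.
        with self.assertRaises(KeyError):
            process("gizmo,1")

# Run with: python -m unittest <this_file>.py
```

Integrating one module at a time this way makes it easier to tell which interface caused a failure than when everything is combined at once.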

Component Testing

Component Testing involves testing individual software components, or a set of related components, in isolation. It is performed before System Testing. Some of the components that may be tested at this stage are:

- Individual functions or methods within an object

- Object classes that have several attributes and methods

- Composite Components made up of several different objects or functions
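To illustrate testing a component in isolation, here is a minimal sketch that tests a hypothetical `Account` class (an object class with attributes and methods) while replacing its collaborator, a notification service, with a mock from Python's standard `unittest.mock` module. The class and its notifier are assumptions made for the example.

```python
import unittest
from unittest import mock

# Hypothetical component under test: a class with attributes and methods.
class Account:
    def __init__(self, owner, notifier):
        self.owner = owner
        self.balance = 0
        self._notifier = notifier  # collaborator, replaced by a mock in isolation

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.balance += amount
        self._notifier.send(self.owner, f"deposited {amount}")
        return self.balance

class AccountComponentTest(unittest.TestCase):
    def setUp(self):
        # The real notifier (e.g. an e-mail service) is not needed to test Account.
        self.notifier = mock.Mock()
        self.account = Account("alice", self.notifier)

    def test_deposit_updates_balance(self):
        self.assertEqual(self.account.deposit(50), 50)
        self.assertEqual(self.account.deposit(25), 75)

    def test_deposit_sends_notification(self):
        self.account.deposit(10)
        self.notifier.send.assert_called_once_with("alice", "deposited 10")

    def test_rejects_non_positive_amount(self):
        with self.assertRaises(ValueError):
            self.account.deposit(0)
        self.notifier.send.assert_not_called()
```

Because the notifier is mocked, a failure in these tests points at `Account` itself rather than at a dependency.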

System Testing

System Testing refers to testing the entire system. The system can be tested as a whole against the Business Requirement Specification(s) (BRS) and/or the System Requirement Specification (SRS). It is conducted on a complete, integrated system to evaluate its compliance with its specified requirements.

As a rule, System Testing takes as its input all of the "integrated" software components that have successfully passed Integration Testing. It is more of an investigatory phase, in which testers adopt an almost destructive attitude and test not only the design but also the behaviour of the system and even the believed expectations of the customer.

It is intended to test up to and beyond the bounds defined in the software/hardware requirements specification(s). It is the final destructive testing phase before Acceptance Testing.
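One common way to probe "up to and beyond" a specified bound is boundary value analysis: test values just below, on and just above each limit. The sketch below assumes a hypothetical requirement that order quantities from 1 to 100 inclusive are accepted; `accepts_quantity` stands in for the system under test.

```python
def boundary_values(lower, upper, step=1):
    """Return values just below, on, and just above each bound of [lower, upper]."""
    return [lower - step, lower, lower + step, upper - step, upper, upper + step]

# Hypothetical requirement: quantities 1..100 inclusive are accepted.
def accepts_quantity(qty):
    return 1 <= qty <= 100

def run_boundary_tests(check, lower, upper):
    """Compare actual behaviour with the requirement at each boundary value."""
    failures = []
    for value in boundary_values(lower, upper):
        expected = lower <= value <= upper
        if check(value) != expected:
            failures.append(value)
    return failures

# A correct implementation yields no failures; an off-by-one bug is caught at 100.
print(run_boundary_tests(accepts_quantity, 1, 100))
print(run_boundary_tests(lambda q: 1 <= q < 100, 1, 100))
```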

Compatibility Testing

This type of testing validates how well software performs on a particular combination of hardware, software, operating system and environment. Compatibility test design and execution are prioritized based on the most commonly occurring combinations of OS, browser and hardware platform.
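As a rough sketch of how such prioritisation might work, the example below enumerates every OS, browser and hardware combination and ranks them by estimated usage share. The share figures are invented placeholders (real figures would come from the customer's own analytics), and multiplying them assumes the three factors are independent, which is a simplification.

```python
from itertools import product

# Hypothetical usage shares (fractions of the user base), for illustration only.
OS_SHARE = {"Windows": 0.6, "macOS": 0.25, "Linux": 0.15}
BROWSER_SHARE = {"Chrome": 0.65, "Edge": 0.2, "Firefox": 0.15}
HARDWARE_SHARE = {"desktop": 0.7, "laptop": 0.3}

def prioritised_matrix(limit):
    """Rank OS/browser/hardware combinations by estimated share (assumes independence)."""
    combos = []
    for (os_name, p_os), (browser, p_br), (hw, p_hw) in product(
        OS_SHARE.items(), BROWSER_SHARE.items(), HARDWARE_SHARE.items()
    ):
        combos.append(((os_name, browser, hw), p_os * p_br * p_hw))
    combos.sort(key=lambda c: c[1], reverse=True)
    return combos[:limit]

# Test the top five combinations first, then extend coverage as time allows.
for combo, share in prioritised_matrix(5):
    print(combo, round(share, 4))
```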

Accessibility Testing

Accessibility Testing ensures that a website, intranet or online application is accessible to all types of users, regardless of age, disability or aptitude. This service ensures that applications built at PI Systems comply with standards such as the W3C Web Accessibility Initiative (WAI) guidelines, including WCAG (Priority 1, 2 and 3), the Disabilities Act, Section 508 and the 1995 Disabilities Discrimination Act India.
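Many accessibility checks can be partly automated. As one small, hedged example, the sketch below uses Python's standard `html.parser` to flag `<img>` elements with no `alt` attribute, which relates to the WCAG requirement for text alternatives to non-text content. It is only one check among many; an empty `alt=""` is deliberately not flagged, because it is the accepted way to mark a purely decorative image.

```python
from html.parser import HTMLParser

class MissingAltChecker(HTMLParser):
    """Collects <img> tags that have no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.violations = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "img" and "alt" not in attributes:
            # getpos() returns the (line, column) of the offending tag.
            self.violations.append((self.getpos(), attributes.get("src")))

def find_missing_alt(html):
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.violations

page = '<p><img src="logo.png" alt="Company logo"><img src="chart.png"></p>'
print(find_missing_alt(page))
```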

Regression Testing

Regression Testing refers to re-testing an application for each new release. It is done to ensure that the application behaves correctly after fixes or modifications have been applied to the software or its environment, and that the fixes have not introduced new defects.

Features include

- Regression testing ensures that reported product defects have been corrected in each new release and that no new problems have been introduced

- New outputs (responses) are compared with old outputs (baselines)
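The baseline comparison described above can be sketched in a few lines. This minimal example assumes baselines and new responses are stored as dictionaries keyed by a test-case ID (the IDs and payloads are hypothetical); any case whose new response differs from its baseline, or is missing, is reported as a potential regression.

```python
def compare_with_baseline(baseline, responses):
    """Return cases whose new response differs from (or is missing versus) the baseline."""
    regressions = {}
    for case_id, expected in baseline.items():
        actual = responses.get(case_id)  # None if the case produced no response
        if actual != expected:
            regressions[case_id] = {"baseline": expected, "response": actual}
    return regressions

# Hypothetical baselines recorded from the previous release.
baseline = {
    "login_ok": {"status": 200},
    "login_bad_password": {"status": 401},
    "search_empty": {"results": []},
}
# Responses captured from the new release.
responses = {
    "login_ok": {"status": 200},
    "login_bad_password": {"status": 500},
    "search_empty": {"results": []},
}
print(compare_with_baseline(baseline, responses))
```

Each reported difference still needs review: it may be a genuine regression or an intended change that requires the baseline to be updated.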

Performance (Load) Testing

This type of testing is carried out to analyse and measure the behaviour of the system in terms of response time, transaction rates and other time-sensitive requirements of an application. This is done to verify whether the performance requirements have been met.

Performance testing is implemented and executed to profile and 'tune' an application's performance behaviour as a function of conditions such as workload or hardware configurations.
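As a minimal sketch of a load test, the example below runs an operation concurrently with a thread pool to simulate several users, then reports throughput and response-time statistics (mean, 95th percentile, maximum). The `operation` argument is a placeholder: a real test would call the application, for example by sending an HTTP request, and dedicated load-testing tools are generally used at scale.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor

def timed_call(operation):
    """Execute one request and return its response time in seconds."""
    start = time.perf_counter()
    operation()
    return time.perf_counter() - start

def load_test(operation, users, requests_per_user):
    """Run `operation` with `users` concurrent workers and summarise response times."""
    total = users * requests_per_user
    if total < 2:
        raise ValueError("need at least two requests to compute percentiles")
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=users) as pool:
        timings = list(pool.map(timed_call, [operation] * total))
    elapsed = time.perf_counter() - start
    return {
        "requests": total,
        "throughput_per_s": total / elapsed,
        "mean_s": statistics.mean(timings),
        "p95_s": statistics.quantiles(timings, n=20)[-1],  # 95th percentile
        "max_s": max(timings),
    }

# Placeholder operation simulating a 10 ms request.
print(load_test(lambda: time.sleep(0.01), users=5, requests_per_user=10))
```

Repeating the run while varying `users` shows how response time changes as a function of workload.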

Security Testing

Security testing is performed to assess the vulnerability of the system to unauthorized internal or external access. Testing is done to ensure that unauthorized persons or systems are not given access to the system.

Features include

- Programs that check for access to the system

- Session Hijacking

- Session Replay

- SQL Injection

- Hidden Field Manipulation

- Elements of security testing such as Authentication, Authorization, Confidentiality, Integrity and Non-repudiation
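As an illustration of an SQL injection test, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. It compares a hypothetical login function that builds SQL by string concatenation with one that uses a parameterised query, and checks whether a classic injection payload lets an unknown user log in. The table, functions and credentials are invented for the example (passwords are stored in plain text only for brevity).

```python
import sqlite3

def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    # Plain-text password for brevity only; real systems store salted hashes.
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn

def login_vulnerable(conn, name, password):
    # String concatenation: attacker input becomes part of the SQL statement.
    query = f"SELECT COUNT(*) FROM users WHERE name = '{name}' AND password = '{password}'"
    return conn.execute(query).fetchone()[0] > 0

def login_safe(conn, name, password):
    # Parameterised query: input is bound as data and never parsed as SQL.
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0

INJECTION_PAYLOAD = "' OR '1'='1"

def is_injectable(login, conn):
    """Security test: can an unknown user log in using an injection payload?"""
    return login(conn, "mallory", INJECTION_PAYLOAD)

conn = make_db()
print("vulnerable:", is_injectable(login_vulnerable, conn))
print("safe:", is_injectable(login_safe, conn))
```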

User Acceptance Testing

User Acceptance Testing (UAT) is the last phase of testing in a software project and is often performed before the customer accepts a new system. The tests are performed by users of the system and are ideally derived from the User Requirements Specification, to which the system should conform.

The focus is on a final verification of the required business functions and flow of the system. The idea is that if the software works as intended and without issues during a simulation of normal use, it can be expected to work the same way in production.
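Because UAT cases are ideally derived from the User Requirements Specification, a simple traceability check can show which requirements are accepted, which have failed, and which have no acceptance test at all. The requirement IDs, test-case IDs and results below are hypothetical, and the three status labels are one possible convention rather than a standard.

```python
# Hypothetical User Requirements Specification entries.
URS = {
    "UR-1": "Customer can place an order",
    "UR-2": "Customer receives an order confirmation e-mail",
    "UR-3": "Customer can cancel an order before dispatch",
}

# Hypothetical UAT results, each case tracing back to the requirements it covers.
UAT_CASES = [
    {"id": "UAT-01", "covers": ["UR-1"], "result": "pass"},
    {"id": "UAT-02", "covers": ["UR-2"], "result": "fail"},
]

def acceptance_status(urs, cases):
    """Map each requirement to 'accepted', 'failed' or 'not covered'."""
    status = {}
    for req in urs:
        results = [c["result"] for c in cases if req in c["covers"]]
        if not results:
            status[req] = "not covered"
        elif all(r == "pass" for r in results):
            status[req] = "accepted"
        else:
            status[req] = "failed"
    return status

print(acceptance_status(URS, UAT_CASES))
```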

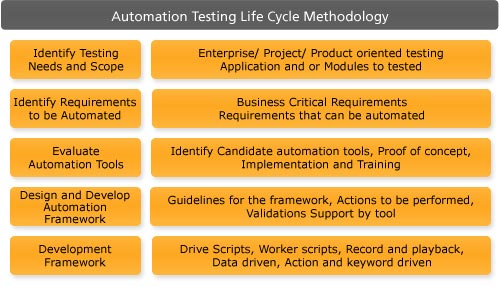

Test Automation

Shrinking cycle times in today's business environment make test automation a critical strategic necessity. We understand the challenge of keeping up with the explosive pace of web-enabled application development and deployment while retaining good test coverage and reducing risk. A shorter project cycle usually means less valuable testing time.

We address these difficulties with proven processes and deep experience in creating and executing test automation scripts and scenarios that deliver real value. Our test automation services are designed to derive the maximum benefit from the investment made in the testing tool. Smart scripting and the reusability of scripts are key attributes of our test automation approach.

Test Automation Activities

- Test Automation Strategy

- Tool evaluation and Proof of Concept

- Development of an Automation Framework

- Creation of Scripts and Scenarios

- Execution of Scripts and Scenarios

- Maintenance of Scripts and Scenarios

- Quantitative Reporting and Analysis

- Continuous Improvement
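To illustrate script reusability and quantitative reporting, here is a minimal sketch of a keyword-driven approach: reusable step functions are registered once, scenarios are written as data that reference those steps, and a runner executes every scenario and summarises pass/fail counts. The keywords, steps and scenarios are hypothetical and stand in for whatever the chosen testing tool or framework provides.

```python
from collections import Counter

# Reusable step library: each hypothetical keyword maps to one function.
def step_add(state, value):
    state["total"] += value

def step_expect_total(state, value):
    if state["total"] != value:
        raise AssertionError(f"expected {value}, got {state['total']}")

STEPS = {"add": step_add, "expect_total": step_expect_total}

# Scenarios are data: (keyword, argument) pairs that reuse the steps above.
SCENARIOS = {
    "simple_sum": [("add", 2), ("add", 3), ("expect_total", 5)],
    "wrong_expectation": [("add", 1), ("expect_total", 2)],
}

def run_scenarios(scenarios, steps):
    """Execute each scenario; return per-scenario results and pass/fail counts."""
    results = {}
    for name, script in scenarios.items():
        state = {"total": 0}  # fresh state per scenario
        try:
            for keyword, arg in script:
                steps[keyword](state, arg)  # an unknown keyword raises KeyError
            results[name] = "pass"
        except AssertionError as exc:
            results[name] = f"fail: {exc}"
    summary = Counter(result.split(":")[0] for result in results.values())
    return results, summary

results, summary = run_scenarios(SCENARIOS, STEPS)
print(results)
print(f"pass rate: {summary['pass'] / len(results):.0%}")
```

Adding a scenario only requires new data, not a new script, which keeps maintenance effort low as the suite grows.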